Terraform Collaboration and the Problem of Shared Environments

Working collaboratively with Terraform can be unexpectedly challenging, even when team members are making changes that seem entirely independent. Although a Terraform apply is idempotent, teams often find themselves in conflict when they share a single integration environment.

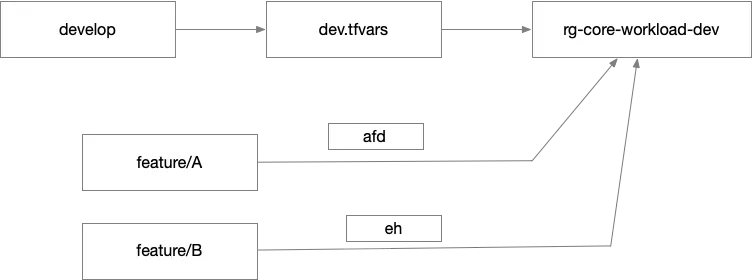

Consider two developers working on separate feature branches: one is adding an Azure Front Door, and the other is integrating Event Hub-based ingestion into Azure Data Explorer (Kusto). If they’re both working in the same environment, say a shared “dev” integration environment, applying their changes becomes a zero-sum game. One developer runs `terraform apply` to provision the Front Door, while the other does the same to provision the Event Hub. But Terraform treats the configuration being applied as the complete description of the environment: any resource recorded in the shared state that is missing from that branch’s configuration is planned for destruction. Each apply therefore risks undoing the other developer’s changes. The Front Door may be removed by the Event Hub deployment and vice versa, creating a frustrating tug-of-war.
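To make the tug-of-war concrete, here is a minimal sketch of what the Front Door branch’s configuration might look like. The resource names, resource group, and region are hypothetical, and the sketch assumes the azurerm provider is configured elsewhere and that both branches apply against the same state for the shared dev environment.

```hcl
# Hypothetical configuration on the Front Door feature branch.
# Assumes both branches share one state for the "dev" environment.

resource "azurerm_resource_group" "dev" {
  name     = "rg-shared-dev" # hypothetical name
  location = "westus2"       # hypothetical region
}

# Exists only on this branch.
resource "azurerm_cdn_frontdoor_profile" "main" {
  name                = "afd-shared-dev" # hypothetical name
  resource_group_name = azurerm_resource_group.dev.name
  sku_name            = "Standard_AzureFrontDoor"
}

# The Event Hub branch declares something like the resource below instead.
# It is absent from this branch, so if it is already recorded in the shared
# state, a plan from this branch proposes destroying it.
#
# resource "azurerm_eventhub_namespace" "ingest" {
#   name                = "evhns-shared-dev"
#   location            = azurerm_resource_group.dev.location
#   resource_group_name = azurerm_resource_group.dev.name
#   sku                 = "Standard"
# }
```

Running `terraform plan` from either branch before applying makes the problem visible: the other developer’s resources show up as planned deletions.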

This type of churn, where changes are inadvertently undone by others, isn’t the result of malicious intent but of shared state and incomplete coordination.

The Mario Party Effect: Churn on a Shared Canvas

This dynamic recalls a mini-game in Mario Party where players, split into two teams, compete to cover a shared canvas with their team’s paint color. The canvas starts blank, but the goal isn’t just to fill empty space — it’s to cover more of your opponent’s color than they cover of yours. Players end up hopping around frantically, overwriting each other’s progress in pursuit of dominance.

In Terraform, developers don’t intentionally overwrite one another’s changes, but the result is similar. When two people apply different configurations to the same environment, they unintentionally undo the work of their teammates. The shared canvas metaphor holds up surprisingly well.

Why We Need Iterative Applies — and Why That’s Hard

Terraform encourages an iterative workflow. Developers typically want to run `terraform apply` repeatedly as they build out new features, testing along the way. But to do that, they need an integration environment where those resources can actually be provisioned.

One approach is to use `terraform apply -target=…` to limit changes to only the relevant parts of the configuration. If the work is neatly encapsulated in a local module, targeting that module block can work well. However, this technique becomes unwieldy if the changes are scattered across files or touch shared resources. You might end up specifying a long list of targets, and even then, inline changes to shared resources may be undone or trigger conflicts. It’s easy to assume your features are isolated, but reality often proves otherwise.
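As a rough illustration of the targeting approach, suppose the Front Door work lives in a single local module. The module name, path, and input below are hypothetical, and the sketch assumes a resource group named `azurerm_resource_group.dev` is declared elsewhere in the root module.

```hcl
# Hypothetical local module that encapsulates the Front Door feature.
module "front_door" {
  source = "./modules/front-door" # hypothetical path

  # Hypothetical input; assumes the resource group is declared elsewhere
  # in this root module.
  resource_group_name = azurerm_resource_group.dev.name
}

# With the feature contained in one module block, a targeted apply limits
# the plan to that module and whatever it depends on:
#
#   terraform apply -target=module.front_door
#
# Changes made outside the module (for example, an edit to a shared
# resource) are not included unless they are dependencies of the target
# or are listed as additional -target arguments.
```

Terraform’s own documentation describes `-target` as intended for exceptional situations rather than routine use, which is another reason to treat this approach as a stopgap.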

Isolated Feature Environments as an Alternative

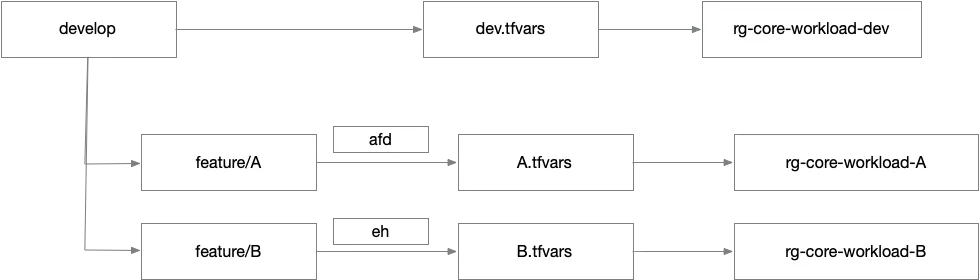

A more robust solution is to spin up isolated environments for each feature branch. Developers can replicate the shared dev environment’s `.tfvars` file to create a personal integration environment. The copy needs its own environment name and its own state; otherwise applies would still land on the shared environment. In this environment, they are free to iterate without fear of interference. Once testing is complete and a pull request is approved, their changes are merged into the main or develop branch and deployed into the shared “dev” environment.
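One way this could look in practice is sketched below. The variable names, naming scheme, and file names are hypothetical, and using a workspace per feature is only one of several ways to give each environment its own state.

```hcl
# Hypothetical variables that keep a personal environment's resources
# from colliding with the shared "dev" environment.

variable "environment" {
  type        = string
  description = "Environment name, e.g. \"dev\" or a per-feature name."
}

variable "location" {
  type        = string
  description = "Azure region for the environment."
}

locals {
  # Resource names are derived from the environment name.
  name_suffix = lower(var.environment)
}

resource "azurerm_resource_group" "env" {
  name     = "rg-app-${local.name_suffix}" # hypothetical naming scheme
  location = var.location
}

# A personal copy of the dev .tfvars file (hypothetical file:
# frontdoor.tfvars) might change only the environment name:
#
#   environment = "frontdoor"
#   location    = "westus2"
#
# Each environment also needs its own state. One option is a separate
# workspace per feature:
#
#   terraform workspace new frontdoor
#   terraform apply -var-file=frontdoor.tfvars
```

Deriving every resource name from the environment variable is what lets the same configuration be applied twice in one subscription without name collisions.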

This model provides predictability and safety, but it might seem overengineered to developers accustomed to application development. In traditional app workflows, developers can run the application locally, test it in isolation, and iterate without touching shared infrastructure. Their local machine becomes the integration environment.

With infrastructure as code, things are different. You can’t spin up a load balancer or cloud database locally, at least not in a way that resembles production. Instead, you need to go to the cloud platform and provision real resources. There’s no such thing as a truly local infrastructure environment. But thanks to Terraform, creating an additional integration environment is entirely feasible. That’s the whole point of infrastructure as code: repeatability and portability.

Layered Architectures and Vertical Slices

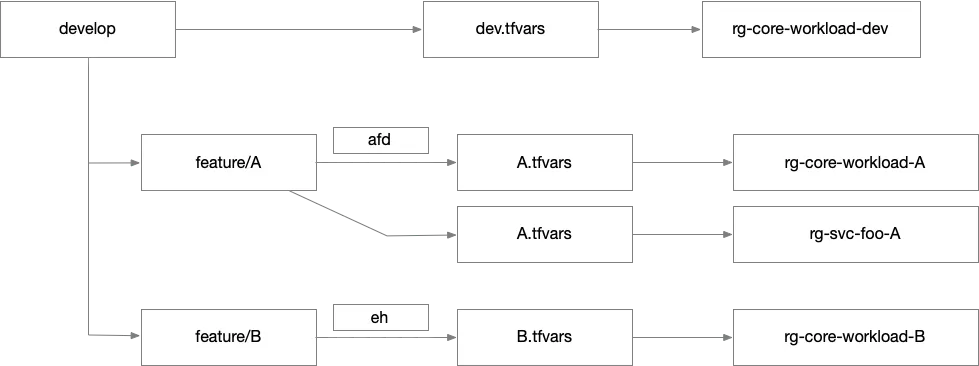

However, this solution works best when your infrastructure is relatively flat — when you’re working within a single root module. In more complex systems with layered infrastructure, things get trickier. Often, multiple root modules work together to form a complete system. A “core” module may set up shared resources, while other root modules build atop it to deploy features like Azure Functions, Event Hubs, or Front Door.

To test a feature like Azure Front Door end-to-end, you need more than just the Front Door module: you also need the downstream services it routes to. Those services may live in separate root modules. So effective testing often requires provisioning a complete vertical slice: the core module plus one or more downstream modules.

Without this full context, developers can’t validate that their Front Door correctly routes to a function, or that their Event Hub delivers messages to the right consumer.
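How root modules are wired together varies by team. One common pattern, shown here as an assumption rather than a description of any particular setup, is for a downstream root module to read the core module’s outputs through the `terraform_remote_state` data source. The storage account, container, state key scheme, and output name below are all hypothetical.

```hcl
# Hypothetical Front Door root module that reads outputs from the "core"
# root module's state. Assumes an azurerm backend; all names are
# placeholders.

variable "environment" {
  type = string
}

data "terraform_remote_state" "core" {
  backend = "azurerm"

  config = {
    resource_group_name  = "rg-tfstate" # hypothetical
    storage_account_name = "sttfstate"  # hypothetical
    container_name       = "tfstate"    # hypothetical
    key                  = "core-${var.environment}.tfstate"
  }
}

resource "azurerm_cdn_frontdoor_profile" "main" {
  name = "afd-${var.environment}"

  # Assumes the core module exports an output named resource_group_name.
  resource_group_name = data.terraform_remote_state.core.outputs.resource_group_name
  sku_name            = "Standard_AzureFrontDoor"
}
```

Because the state key includes the environment name, a feature environment’s Front Door reads from that same feature environment’s core layer, which keeps the whole vertical slice isolated from the shared “dev” environment.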

Conclusion

In Terraform-driven workflows, isolation isn’t a luxury; it’s a necessity. Shared environments create conflict, even when developers work in good faith on non-overlapping changes. To preserve speed, confidence, and correctness, teams must provide isolated environments where developers can iterate freely.

This isn’t about avoiding collaboration — it’s about enabling it. With isolated feature environments and a thoughtful approach to layered stacks, Terraform teams can preserve the integrity of their shared environments while still building rapidly and independently.