Building Meaningful Terraform Test Workflows

Testing infrastructure with Terraform is often reduced to syntax checks and resource validation, missing the deeper question: does the deployed environment actually work?

For Azure Function Apps, meaningful assertions require more than checking that a resource group exists or that a Function App was created. You need to validate that your application code is deployed, your triggers are working, and your endpoint responds correctly under real-world conditions.

This article demonstrates how to build a practical, reliable terraform test workflow that ties together CI build artifacts, infrastructure provisioning, application deployment, and live endpoint validation into a single, automated pipeline. By using a simple .NET Azure Function as a test workload, you gain a living, maintainable test harness that not only proves your Terraform infrastructure works but also confirms that your Azure Function App is functional after deployment.

Azure product teams should consider using this approach to create living documentation for their services, showcasing end-to-end scenarios that remain relevant and usable, rather than providing static, disconnected samples that quickly become obsolete. This shift would reduce long-term operational friction for customers, enabling more confident, automated adoption of Azure services at scale.

The complete code is available on GitHub:

https://github.com/markti/automation-tests-azure-functions

№1 Verify Deployment Package

Before any infrastructure is provisioned or resources are consumed in Azure, it is essential to verify the presence of the .NET deployment package. Without this package, the pipeline would proceed to spin up cloud resources that cannot be utilized, wasting time, consuming quota, and generating unnecessary cloud costs. To prevent this, I included a setup verification step in the test harness.

How the Check Works

Using the local_sensitive_file data source from the hashicorp/local provider, I check for the existence and readability of the .NET deployment package (typically a dotnet-deployment.zip produced by your CI build pipeline):

```hcl
data "local_sensitive_file" "dotnet_deployment" {
  filename = var.deployment_package_path
}
```

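This reads the file's contents into Terraform and marks them as sensitive, so they are not printed in plan or apply output. If the file is missing or unreadable, the local provider reports an error when it reads the data source. The setup run then fails with that error rather than returning an empty value. The assertion in the setup step states the expectation explicitly.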
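The ./testing/setup module also has to declare the local provider and the input variable. Below is a minimal sketch of those declarations, placed alongside the data source above. The validation block is my addition and not necessarily in the repository. It uses Terraform's built-in fileexists function, so a missing package is reported with a readable message during variable validation instead of a raw file-read error.

```hcl
terraform {
  required_providers {
    local = {
      source = "hashicorp/local"
    }
  }
}

variable "deployment_package_path" {
  type        = string
  description = "Path to the zipped .NET build output produced by CI."

  # Illustrative addition: fail early with a clear message when the
  # package is absent, before the data source attempts to read it.
  validation {
    condition     = fileexists(var.deployment_package_path)
    error_message = "The .NET deployment package was not found at the given path."
  }
}
```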

Test Harness Setup Step

The actual test run for this verification looks like:

```hcl
run "setup" {
  module {
    source = "./testing/setup"
  }

  variables {
    deployment_package_path = var.deployment_package_path
  }

  providers = {
    azurerm = azurerm
  }

  assert {
    condition     = length(data.local_sensitive_file.dotnet_deployment.id) > 0
    error_message = ".NET Deployment Package must be available"
  }
}
```

A Typical Workflow

- Your CI pipeline produces dotnet-deployment.zip containing your Azure Function App build artifacts.

- This file is stored in your workspace at a known path (for example, ./dotnet-deployment.zip).

- The setup step in your Terraform test harness checks for the existence and readability of this file before proceeding to any infrastructure provisioning.

- If the file is missing, the pipeline fails immediately.

- If the file is present, the test harness continues to infrastructure provisioning, confident that the deployment artifact is ready for the subsequent deployment step.
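Every run block in this article reads var.deployment_package_path and passes azurerm = azurerm. Both of those come from the top level of the test file. Here is a sketch of that preamble. The file name is hypothetical, and the path is the example location from the list above. With azurerm 4.x, the provider also needs a subscription ID, which it can pick up from the ARM_SUBSCRIPTION_ID environment variable in CI.

```hcl
# Hypothetical file: tests/end-to-end.tftest.hcl

# File-level variables are visible to every run block as var.<name>.
variables {
  deployment_package_path = "./dotnet-deployment.zip"
}

# Provider configuration that the run blocks hand to their modules
# through providers = { azurerm = azurerm }.
provider "azurerm" {
  features {}
}
```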

№2 Provision Infrastructure

After verifying that the .NET deployment package exists, I moved on to provisioning the Azure infrastructure required to host the Function App.

This step uses the Terraform root module (flex-baseline) to provision:

- An Azure Resource Group for environment isolation.

- A Service Plan (using Flex Consumption or other SKUs) that will host the Function App.

- A Storage Account and container to back the Function App, necessary for function runtime storage and logs.

- The Function App itself, configured with runtime settings, instance memory, and instance limits, and linked to the service plan and storage account.

By encapsulating these resources in the flex-baseline module, I keep the test structure clean and reusable while ensuring that every test spin-up has its own isolated, reproducible Azure environment.
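Below is a partial sketch of the resources listed above, to show the general shape of such a module. It is not the author's flex-baseline module:

- Resource names, the random suffix, and the eastus default are assumptions.
- The storage_account_id argument on azurerm_storage_container assumes a recent azurerm 4.x release; older releases use storage_account_name.
- The Function App resource is omitted because its Flex Consumption arguments vary between provider versions.

Because every variable has a default, the empty variables block in the provision run still works.

```hcl
variable "location" {
  type    = string
  default = "eastus" # assumption: choose a region that offers Flex Consumption
}

# Random suffix so globally unique names (such as the storage account)
# do not collide between test runs.
resource "random_string" "suffix" {
  length  = 8
  upper   = false
  special = false
}

resource "azurerm_resource_group" "main" {
  name     = "rg-fn-test-${random_string.suffix.result}"
  location = var.location
}

resource "azurerm_storage_account" "main" {
  name                     = "stfn${random_string.suffix.result}"
  resource_group_name      = azurerm_resource_group.main.name
  location                 = azurerm_resource_group.main.location
  account_tier             = "Standard"
  account_replication_type = "LRS"
}

# Private blob container backing the Function App (name is hypothetical).
resource "azurerm_storage_container" "deployments" {
  name                  = "deployments"
  storage_account_id    = azurerm_storage_account.main.id
  container_access_type = "private"
}

resource "azurerm_service_plan" "main" {
  name                = "asp-fn-test-${random_string.suffix.result}"
  resource_group_name = azurerm_resource_group.main.name
  location            = azurerm_resource_group.main.location
  os_type             = "Linux"
  sku_name            = "FC1" # Flex Consumption SKU
}
```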

The provisioning step in the test harness looks like this:

```hcl
run "provision" {
  command = apply

  module {
    source = "./src/terraform/flex-baseline"
  }

  variables {
  }

  providers = {
    azurerm = azurerm
  }

  assert {
    condition     = length(azurerm_resource_group.main.name) > 0
    error_message = "Must have a valid Resource Group Name"
  }
}
```
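The later runs reference three values:

- run.provision.function_app_name
- run.provision.resource_group_name
- run.provision.function_app_default_hostname

A run block exposes only its module's outputs to later runs, so flex-baseline needs outputs with exactly these names. A minimal sketch follows. It assumes the Function App is declared as azurerm_function_app_flex_consumption.main, which is a hypothetical address; substitute whatever the module actually declares.

```hcl
# outputs.tf in flex-baseline (sketch)

output "resource_group_name" {
  value = azurerm_resource_group.main.name
}

# Assumed resource address; adjust to the module's real Function App resource.
output "function_app_name" {
  value = azurerm_function_app_flex_consumption.main.name
}

output "function_app_default_hostname" {
  value = azurerm_function_app_flex_consumption.main.default_hostname
}
```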

№3 Deploy Function App Code

Provisioning the infrastructure is only half the job; the next critical step is to deploy the actual function code to the newly created Azure Function App. For this, I use a null_resource with local-exec to push a zipped .NET deployment package using the Azure CLI.

This keeps the Terraform configuration infrastructure-centric while providing a clean hook for deploying application code. There is no separate deployment tool, and control stays in a single pipeline.

Deployment Step Structure

The deployment is orchestrated in the test suite as:

```hcl
run "deploy" {
  module {
    source = "./testing/deploy-azure-fn"
  }

  variables {
    function_app_name           = run.provision.function_app_name
    function_app_resource_group = run.provision.resource_group_name
    deployment_package_path     = var.deployment_package_path
  }

  providers = {
    azurerm = azurerm
  }

  assert {
    condition     = length(null_resource.publish.id) > 0
    error_message = "Null Resource Should be OK"
  }

  assert {
    condition     = length(data.azurerm_function_app_host_keys.main.default_function_key) > 0
    error_message = "Function Key should be OK"
  }
}
```
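The deploy run passes three values into ./testing/deploy-azure-fn, so that module must declare matching input variables and the providers it uses. Here is a sketch of those declarations. The description strings are mine.

```hcl
# variables.tf in ./testing/deploy-azure-fn (sketch)

terraform {
  required_providers {
    azurerm = {
      source = "hashicorp/azurerm"
    }
    null = {
      source = "hashicorp/null"
    }
  }
}

variable "function_app_name" {
  type        = string
  description = "Name of the Function App created by the provision run."
}

variable "function_app_resource_group" {
  type        = string
  description = "Resource group that contains the Function App."
}

variable "deployment_package_path" {
  type        = string
  description = "Local path to the zipped .NET build output."
}
```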

The Deployment Command

Inside the module, the null_resource executes:

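```hcl
resource "null_resource" "publish" {
  provisioner "local-exec" {
    # Push the zipped build output to the Function App with the Azure CLI.
    # Arguments are quoted so that paths containing spaces stay intact.
    command = <<-EOT
      az functionapp deployment source config-zip -g "${var.function_app_resource_group}" -n "${var.function_app_name}" --src "${var.deployment_package_path}"
    EOT
  }
}
```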

Here:

- -g specifies the resource group.

- -n specifies the function app name.

- --src points to the zipped deployment package, typically produced by your CI/CD build pipeline (e.g., a GitHub Actions or Azure DevOps pipeline generating dotnet-deployment.zip).

This command ensures your latest application build is deployed to the provisioned Function App, allowing immediate validation.
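Here is an alternative worth considering, sketched by me and not taken from the repository. Terraform 1.4 and later include a built-in terraform_data resource that supports provisioners, which removes the dependency on the hashicorp/null provider. Setting triggers_replace to the filemd5 of the package replaces the resource, and so re-runs the publish step, whenever the package contents change. The null_resource above, by contrast, runs its provisioner only when it is first created. If you adopt this version, the deploy run's assertion would need to reference terraform_data.publish.id.

```hcl
resource "terraform_data" "publish" {
  # Re-create (and re-run the provisioner) whenever the zip contents change.
  triggers_replace = [filemd5(var.deployment_package_path)]

  provisioner "local-exec" {
    # Same Azure CLI zip deployment as the null_resource version.
    command = <<-EOT
      az functionapp deployment source config-zip -g "${var.function_app_resource_group}" -n "${var.function_app_name}" --src "${var.deployment_package_path}"
    EOT
  }
}
```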

Retrieving Function Host Keys

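Immediately after deployment, I retrieve the Function App host keys using the azurerm_function_app_host_keys data source:

```hcl
data "azurerm_function_app_host_keys" "main" {
  name                = var.function_app_name
  resource_group_name = var.function_app_resource_group

  # Defer the read until the zip deployment has finished; without this,
  # Terraform can read the keys during planning, before the publish step runs.
  depends_on = [null_resource.publish]
}
```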

These host keys are critical for:

- Calling the function during the health check validation step.

- Enabling integration tests that require authenticated access to the deployed function endpoints.

Skipping this deployment step would leave the Function App provisioned but empty, failing to validate whether your .NET isolated function code executes correctly in Azure. By including this deployment step:

- You test end-to-end deployment, not just infrastructure scaffolding.

- You ensure function triggers and bindings configured in your app code are recognized by Azure.

- You can confidently validate the runtime environment using real invocation checks immediately after deployment.
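The health check in the next step builds its URL from run.deploy.function_key, so the deploy module has to expose the key as an output. Below is a minimal sketch, assuming the data source is named as above. The azurerm provider treats the key as sensitive. Terraform therefore refuses an output that references it unless the output is also marked sensitive.

```hcl
# outputs.tf in ./testing/deploy-azure-fn (sketch)
output "function_key" {
  value     = data.azurerm_function_app_host_keys.main.default_function_key
  sensitive = true # required because the source attribute is sensitive
}
```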

№4 Health Check the Endpoint

Provisioning the infrastructure and deploying code are necessary steps, but they are not sufficient to confirm a working Azure Function App deployment. To validate that the deployed function is actually operational, I added a live HTTP check against the endpoint.

This test ensures that the Function App is not only provisioned correctly but is actively running the deployed .NET code, returning the expected response under real-world conditions.

Here’s the structure of the health check:

```hcl
run "healthcheck" {
  module {
    source = "./testing/healthcheck-azure-fn"
  }

  variables {
    endpoint = "https://${run.provision.function_app_default_hostname}/api/Function1?code=${run.deploy.function_key}"
  }

  providers = {
    azurerm = azurerm
  }

  assert {
    condition     = data.http.endpoint.status_code == 200
    error_message = "Function Endpoint should be OK"
  }

  assert {
    condition     = data.http.endpoint.response_body == "Welcome to Azure Functions!"
    error_message = "Function Endpoint should return the correct content"
  }
}
```

This step deliberately hits the deployed function code at the api/Function1 endpoint using the function key obtained during deployment. Without this step, the test could pass while the function itself is broken, misconfigured, or entirely missing.

The endpoint URL looks like this:

https://${run.provision.function_app_default_hostname}/api/Function1?code=${run.deploy.function_key}

It uses the default hostname of the provisioned Function App and appends the function key for authorization, so the call matches how a client would invoke the function in production.

Expected Behavior from the Function

The deployed .NET isolated function is simple but explicit in what it returns, allowing the test to remain deterministic:

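```csharp
using Microsoft.AspNetCore.Http;
using Microsoft.AspNetCore.Mvc;
using Microsoft.Azure.Functions.Worker;
using Microsoft.Extensions.Logging;

public class Function1
{
    private readonly ILogger<Function1> _logger;
    public Function1(ILogger<Function1> logger)
    {
        _logger = logger;
    }

    // HTTP-triggered function served at /api/Function1; requires a function key.
    [Function("Function1")]
    public IActionResult Run([HttpTrigger(AuthorizationLevel.Function, "get", "post")] HttpRequest req)
    {
        _logger.LogInformation("C# HTTP trigger function processed a request.");
        return new OkObjectResult("Welcome to Azure Functions!");
    }
}
```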

When the health check hits this endpoint with a GET request, it expects an HTTP 200 status code with a response body of:

Welcome to Azure Functions!

This confirms that:

- The infrastructure is provisioned and reachable.

- The function code is correctly deployed and executed.

- The HTTP trigger is working under the expected authorization level.

Terraform performs this health check using the http data source from the hashicorp/http provider:

```hcl
data "http" "endpoint" {
  url    = var.endpoint
  method = "GET"
}
```

By asserting on data.http.endpoint.status_code and data.http.endpoint.response_body, the health check will fail the test suite immediately if the deployment is broken, misconfigured, or returns unexpected output.
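For completeness, here is a sketch of the rest of ./testing/healthcheck-azure-fn, offered under stated assumptions rather than as the repository's code:

- The endpoint variable is marked sensitive because the URL embeds the function key.
- A freshly deployed Flex Consumption app may not answer the first request, so the data source gets a retry block.
- The retry block assumes a 3.x release of hashicorp/http that supports it; check the provider changelog for your version.
- Which failures it retries is defined by the provider. Treat this as a resilience aid, not a guarantee.

```hcl
terraform {
  required_providers {
    http = {
      source = "hashicorp/http"
    }
  }
}

variable "endpoint" {
  type        = string
  description = "Full function URL, including the ?code= function key."
  sensitive   = true # keep the embedded key out of plan output
}

data "http" "endpoint" {
  url    = var.endpoint
  method = "GET"

  # Give a cold or still-starting app a few chances before the
  # run's assertions evaluate status_code and response_body.
  retry {
    attempts     = 5
    min_delay_ms = 2000
    max_delay_ms = 10000
  }
}
```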

This pattern is simple, fast, and integrates directly with your CI pipeline, providing a clean green/red signal for deployment validation. It also ensures:

- Your Terraform test is validating end-to-end deployment, not just infrastructure shape.

- Any misconfigurations in your storage connection, runtime, or app settings are caught immediately.

- The test remains reusable for any Function App endpoint that supports HTTP GET, as long as you pass in the correct endpoint and update the expected response body in the assertion.

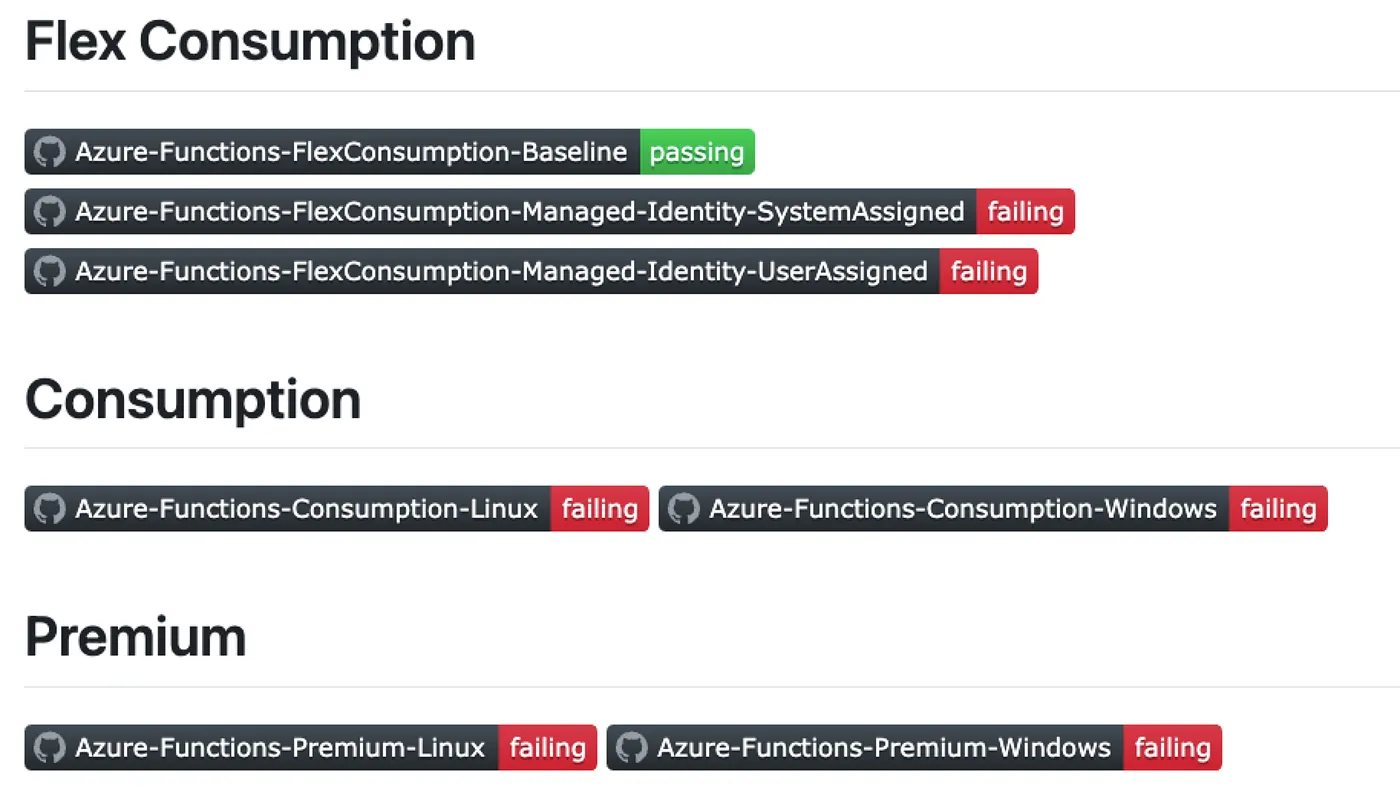

Where Flex Consumption Fails Today

The approach above, which connects to the Storage Account with a connection string, worked. Switching to Managed Identity, however, immediately broke Flex Consumption deployments.

In a modern environment where secure identity practices are required, Flex Consumption currently blocks many CI/CD use cases, particularly for teams looking to automate infrastructure in Terraform end-to-end while adhering to security best practices.

Conclusion

This Terraform test workflow helped me systematically isolate where Flex Consumption fails and which Azure Function App deployment paths are still reliable in 2025.

If you’re looking to use Azure Functions Flex Consumption with Managed Identity, be aware that you will run into issues that are not apparent in the Terraform provider documentation or the Azure portal.

Until Microsoft addresses these gaps, either stick with Premium Plans (quota permitting) or prepare for less secure patterns if you must use Flex Consumption with Terraform. Of course you could try your luck with the AzAPI provider as well.