Managing Azure Front Door with Terraform Stacks

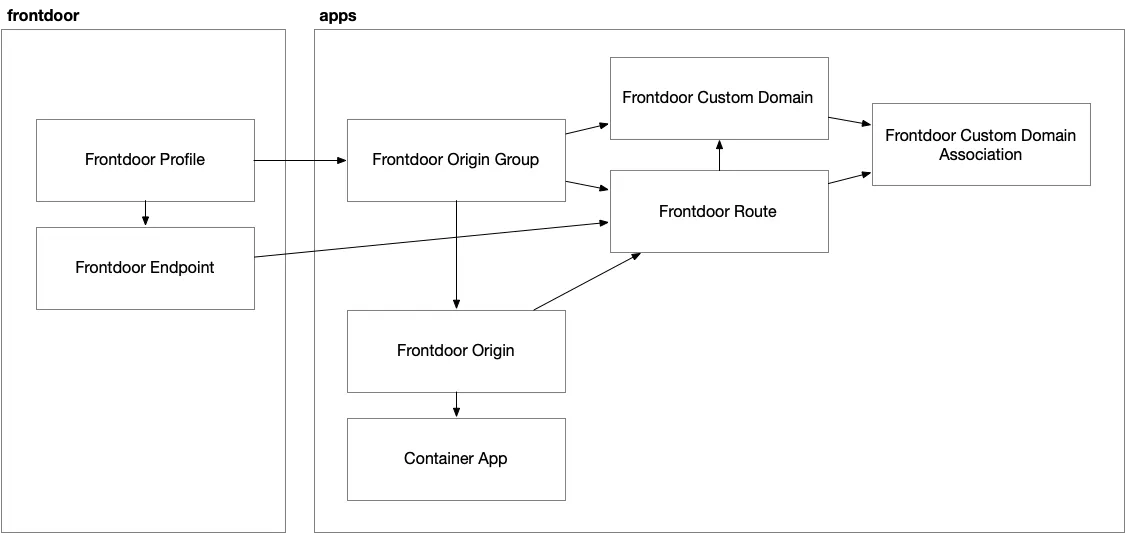

Configuring Azure Front Door using Terraform within a multi-environment, potentially multi-region deployment is not as straightforward as it initially seems. The service naturally straddles global and regional architectural boundaries — requiring thoughtful separation of global infrastructure (like the Front Door profile and endpoint) from region-specific application components (such as origins and routes).

This article documents how I approached Front Door setup using Terraform stacks. The process is still a work in progress, especially as I anticipate scaling out to a multi-region architecture. But along the way, I uncovered important considerations around domain validation, component modularization, and the relationship between Front Door’s configuration elements.

Isolating Global Components

To start, I created a separate Terraform component for the core Front Door resources: the Front Door profile and its endpoint. This component currently lives in a module called frontdoor, although I may rename it to global later to better reflect its scope.

This design decision aims to centralize the parts of Front Door that are shared across all application environments and potentially across multiple regional instances.
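As a rough illustration of what such a global component could contain, here is a minimal sketch. The resource names, the SKU, and the resource_group_name variable are placeholders I am assuming for illustration; only the shape of the frontdoor output (profile_id and endpoint_id) is inferred from how the regional code later reads var.frontdoor.

```hcl
# Hypothetical input: the resource group that holds the shared Front Door resources.
variable "resource_group_name" {
  type = string
}

resource "azurerm_cdn_frontdoor_profile" "main" {
  name                = "afd-example" # placeholder name
  resource_group_name = var.resource_group_name
  sku_name            = "Standard_AzureFrontDoor" # assumed SKU; Premium_AzureFrontDoor is the alternative
}

resource "azurerm_cdn_frontdoor_endpoint" "main" {
  name                     = "fde-example" # placeholder name
  cdn_frontdoor_profile_id = azurerm_cdn_frontdoor_profile.main.id
}

# Assumed output shape, matching the var.frontdoor.profile_id and
# var.frontdoor.endpoint_id references used by the regional resources.
output "frontdoor" {
  value = {
    profile_id  = azurerm_cdn_frontdoor_profile.main.id
    endpoint_id = azurerm_cdn_frontdoor_endpoint.main.id
  }
}
```

Bundling both IDs into one object output keeps the hand-off to downstream components to a single input.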

Region-Specific Configuration: Origins and Routes

For each application environment, I use a separate Terraform stack that includes the Front Door origin group, origin, and route. These elements connect the regional backend — typically an Azure Container App — to the globally defined Front Door endpoint.
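One way the global and regional pieces could be wired together is sketched below, assuming both live as components in the same stack. The component names, module paths, the frontdoor_resource_group_name variable, and the provider label this are all assumptions for illustration, and the provider configuration itself is omitted.

```hcl
# Hypothetical stack configuration (a *.tfcomponent.hcl file).

variable "frontdoor_resource_group_name" {
  type = string
}

component "frontdoor" {
  source = "./frontdoor" # assumed path to the global module

  inputs = {
    resource_group_name = var.frontdoor_resource_group_name
  }

  providers = {
    azurerm = provider.azurerm.this # assumes a provider "azurerm" "this" block defined elsewhere
  }
}

component "frontend" {
  source = "./apps" # assumed path to the regional application module

  inputs = {
    # Hands the global profile and endpoint IDs to the regional resources.
    frontdoor = component.frontdoor.frontdoor
  }

  providers = {
    azurerm = provider.azurerm.this
  }
}
```

Inside the regional module, a matching variable named frontdoor with an object type of two string attributes (profile_id and endpoint_id) would receive this value.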

Here’s how I configured the origin group, which represents a workload with its own health probe:

    resource "azurerm_cdn_frontdoor_origin_group" "main" {
      name                     = "default-origin-group"
      cdn_frontdoor_profile_id = var.frontdoor.profile_id

      health_probe {
        path                = "/"
        protocol            = "Http"
        request_type        = "GET"
        interval_in_seconds = 100
      }

      load_balancing {
        sample_size                        = 4
        successful_samples_required        = 3
        additional_latency_in_milliseconds = 50
      }
    }

In this case, the health probe path is just /, which is a suitable check for a static React website. It doesn’t offer deep insight into backend behavior, but it is enough to confirm that the container app is up.

Next, the origin resource connects the backend container app to the origin group:

    resource "azurerm_cdn_frontdoor_origin" "main" {
      name                           = "default-origin"
      cdn_frontdoor_origin_group_id  = azurerm_cdn_frontdoor_origin_group.main.id
      enabled                        = true
      certificate_name_check_enabled = false
      host_name                      = azurerm_container_app.frontend.ingress[0].fqdn
      http_port                      = 80
      https_port                     = 443
      origin_host_header             = azurerm_container_app.frontend.ingress[0].fqdn
      priority                       = 1
      weight                         = 1000
    }

Then comes the route, which ties everything together:

    resource "azurerm_cdn_frontdoor_route" "main" {
      name                          = "default-route"
      cdn_frontdoor_endpoint_id     = var.frontdoor.endpoint_id
      cdn_frontdoor_origin_group_id = azurerm_cdn_frontdoor_origin_group.main.id
      cdn_frontdoor_origin_ids      = [azurerm_cdn_frontdoor_origin.main.id]
      enabled                       = true
      supported_protocols           = ["Http", "Https"]
      patterns_to_match             = ["/*"]
      forwarding_protocol           = "HttpOnly"
      https_redirect_enabled        = true
    }

Right now, I only have one origin because the app is deployed in a single region. In a multi-region, active-active deployment, I plan to have an origin per regional stamp. Each region would get its own origin and route components, while sharing the global profile and endpoint.

Adding Custom Domains

To expose the app publicly under a domain like dev.example.com, I use the azurerm_cdn_frontdoor_custom_domain resource. This is set up once per workload and applies across all regions.

    resource "azurerm_cdn_frontdoor_custom_domain" "main" {
      name                     = "fdcd-${var.application_name}-${var.environment_name}"
      cdn_frontdoor_profile_id = var.frontdoor.profile_id
      host_name                = var.frontend_domain_name

      tls {
        certificate_type = "ManagedCertificate"
      }
    }

Right now, I pass the frontend domain name into the deployment as a variable declared in variables.tfcomponent.hcl, and then set the desired value in my deployment block:

    deployment "dev" {
      inputs = {
        # …snip…
        frontend_domain_name = "dev.revoptix.com"
        # …snip…
      }
    }

This allows me to spin up environments with domains like dev.contoso.com, demo.contoso.com, or whatever fits the domain strategy.
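For reference, the stack-level declaration behind that input might look something like the sketch below. The description text and the component name frontend are assumptions for illustration, and the rest of the component block is trimmed.

```hcl
# variables.tfcomponent.hcl
# Stack-level variable; each deployment block supplies its own value.
variable "frontend_domain_name" {
  type        = string
  description = "Custom domain served through Front Door, for example dev.example.com"
}

# Hypothetical component block: forwards the stack variable to the module
# that declares the azurerm_cdn_frontdoor_custom_domain resource.
component "frontend" {
  source = "./apps" # assumed module path

  inputs = {
    frontend_domain_name = var.frontend_domain_name
  }

  providers = {
    azurerm = provider.azurerm.this # assumes a provider "azurerm" "this" block defined elsewhere
  }
}
```

Stack variables require an explicit type, so the declaration cannot be left untyped the way a plain module variable can.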

The Domain Association Hurdle

At this point, I tried to associate the custom domain to the Front Door route using:

    resource "azurerm_cdn_frontdoor_custom_domain_association" "main" {
      cdn_frontdoor_custom_domain_id = azurerm_cdn_frontdoor_custom_domain.main.id
      cdn_frontdoor_route_ids        = [azurerm_cdn_frontdoor_route.main.id]
    }

However, this resulted in an error:

    Error: creating Front Door Custom Domain Association: (Association Name "fdcd-ro-contract-engine-processor-dev" / Profile Name "afd-ro-contract-engine-processor-dev-do856ja4" / Resource Group "rg-ro-contract-engine-processor-dev-frontdoor"): the CDN FrontDoor Route(Name: "default-route") is currently not associated with the CDN FrontDoor Custom Domain(Name: "fdcd-ro-contract-engine-processor-dev"). Please remove the CDN FrontDoor Route from your 'cdn_frontdoor_custom_domain_association' configuration block

      on src/terraform/apps/frontend.tf line 165, in resource "azurerm_cdn_frontdoor_custom_domain_association" "main":

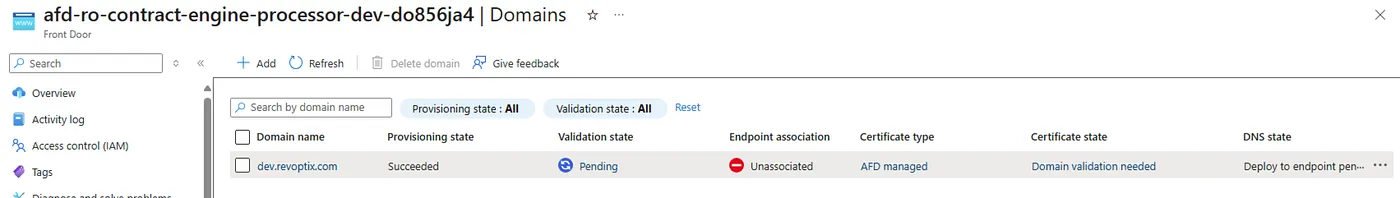

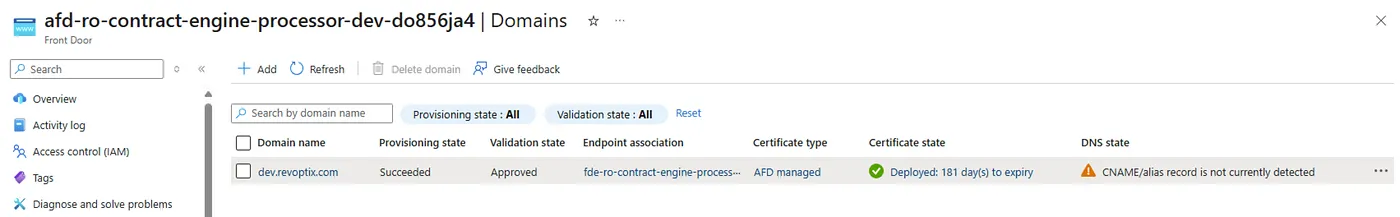

This error occurs because the route must explicitly declare its own association with the custom domain. Without that, the standalone association resource fails.

After I removed the association resource, the deployment succeeded, and the portal looked like this:

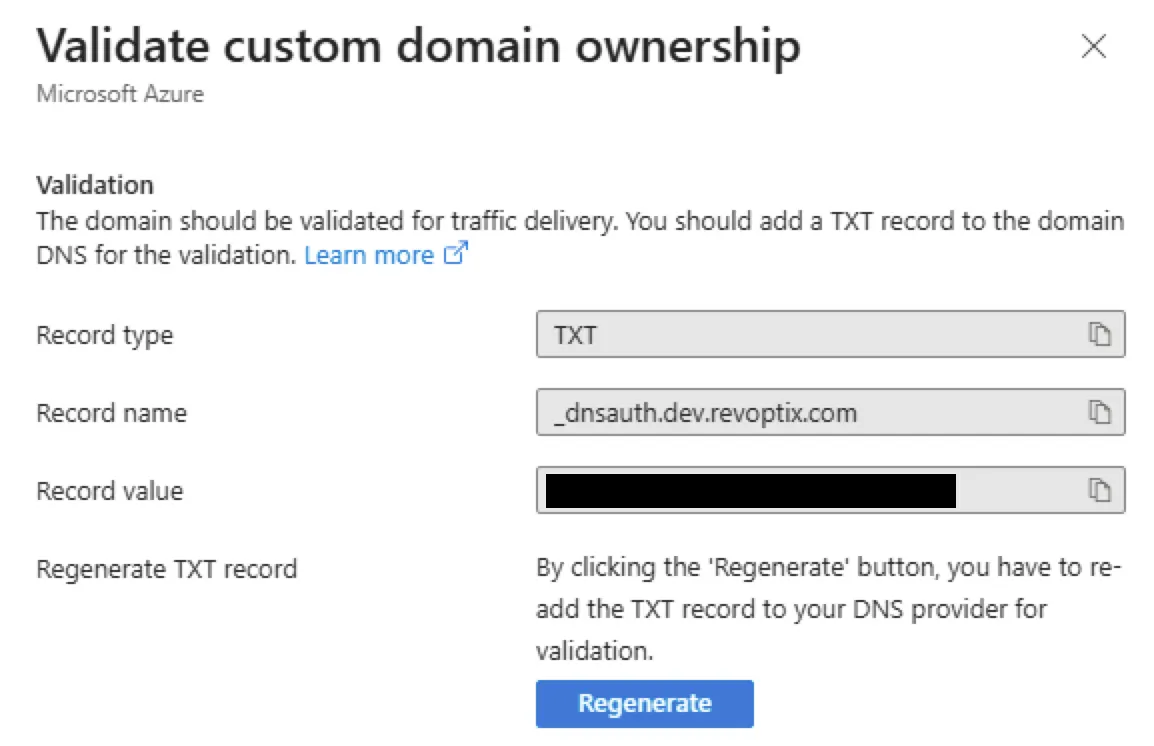

In the Azure Portal, I could see that the custom domain’s validation state remained Pending, and the endpoint association was marked Unassociated. Clicking on Pending reveals the required TXT record for domain ownership verification.

Note that depending on your DNS provider, you may need to enter only the prefix of the fully qualified record name. For example, with Dynadot you’d typically enter just the subdomain portion (such as _dnsauth.dev) rather than the full name (_dnsauth.dev.example.com).

Once the domain is verified — which may take hours — you also need a CNAME record pointing to the Front Door endpoint. The TXT record only proves domain ownership; the CNAME is required for actual traffic routing.
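If the DNS zone is managed outside Azure, as in my case, it can help to surface the validation details from Terraform instead of reading them from the portal. The following is a small sketch, assuming it sits in the same module as the custom domain resource; the output name is hypothetical, and validation_token is the attribute the azurerm custom domain resource exports for this purpose.

```hcl
# Hypothetical output: the TXT record to create at the external DNS provider.
output "frontend_domain_validation" {
  description = "TXT record needed to prove ownership of the custom domain"
  value = {
    # Front Door expects the TXT record under the _dnsauth label of the host name.
    record_name  = "_dnsauth.${var.frontend_domain_name}"
    record_value = azurerm_cdn_frontdoor_custom_domain.main.validation_token
  }
}
```

With a Stacks setup, this value would still need to be passed up through a stack-level output before it appears anywhere outside the component.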

Correcting the Route Association

To fix the earlier error, I modified the route resource to include the custom domain ID directly:

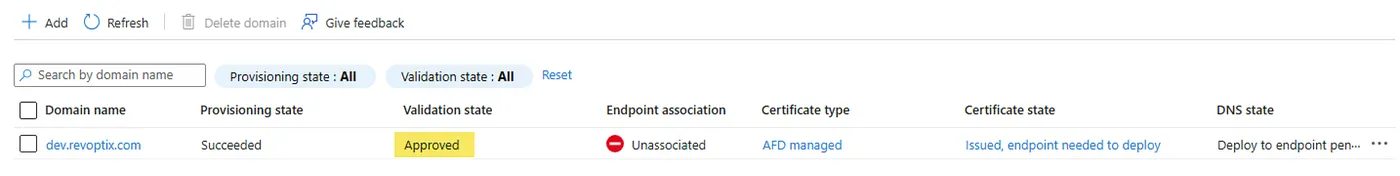

The really important thing I was missing is that the route itself must also associate with the custom domain, which it does through cdn_frontdoor_custom_domain_ids. Once this was in place, the azurerm_cdn_frontdoor_custom_domain_association resource was no longer necessary, because the route itself handled the domain binding.

    resource "azurerm_cdn_frontdoor_route" "main" {
      name                            = "default-route"
      cdn_frontdoor_endpoint_id       = var.frontdoor.endpoint_id
      cdn_frontdoor_origin_group_id   = azurerm_cdn_frontdoor_origin_group.main.id
      cdn_frontdoor_origin_ids        = [azurerm_cdn_frontdoor_origin.main.id]
      enabled                         = true
      supported_protocols             = ["Http", "Https"]
      patterns_to_match               = ["/*"]
      forwarding_protocol             = "HttpOnly"
      https_redirect_enabled          = true
      cdn_frontdoor_custom_domain_ids = [azurerm_cdn_frontdoor_custom_domain.main.id]
    }

Once I do that, I am good to go with the association. Now I simply need to get rid of the “CNAME/alias record is not currently detected” error by adding a CNAME record in addition to the TXT record.

The TXT record only validates that you own the domain name; it doesn’t actually provide DNS resolution to the Front Door endpoint.
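For readers whose zone is hosted in Azure DNS (mine is not), both records could be managed in Terraform alongside the custom domain. This is only a sketch under that assumption: every variable below is hypothetical, and the endpoint host name would have to be exported from the global component.

```hcl
# Hypothetical inputs; none of these names come from my actual configuration.
variable "dns_zone_name" { type = string }                # e.g. example.com
variable "dns_zone_resource_group_name" { type = string }
variable "frontend_subdomain" { type = string }           # e.g. dev
variable "frontdoor_endpoint_host_name" { type = string } # host_name of the Front Door endpoint

# TXT record: proves ownership of the domain to Front Door.
resource "azurerm_dns_txt_record" "validation" {
  name                = "_dnsauth.${var.frontend_subdomain}"
  zone_name           = var.dns_zone_name
  resource_group_name = var.dns_zone_resource_group_name
  ttl                 = 3600

  record {
    value = azurerm_cdn_frontdoor_custom_domain.main.validation_token
  }
}

# CNAME record: sends traffic for the subdomain to the Front Door endpoint.
resource "azurerm_dns_cname_record" "frontend" {
  name                = var.frontend_subdomain
  zone_name           = var.dns_zone_name
  resource_group_name = var.dns_zone_resource_group_name
  ttl                 = 3600
  record              = var.frontdoor_endpoint_host_name
}
```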

Architectural Reflections

Currently, the setup follows this organization:

- A global component for the Front Door profile and endpoint.

- A regional stamp component for the origin group, origin, route, and custom domain.

However, I’m not fully satisfied with this model — especially as I begin planning for multi-region deployment. Both the origin group and custom domain might need to be shared across regions, making them less appropriate to live solely within the regional stamp.

A better structure might involve:

- Keeping profile, endpoint, custom domain, and origin group in a shared global layer.

- Treating origin, route, and custom domain association as region-specific resources.

This change would better reflect the architecture Front Door encourages: globally distributed routing with regional backend stamps.
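To make the proposed split a little more concrete, here is a sketch of what a regional origin might look like once the origin group moves to the global layer. I have not built this yet; the origin_group_id attribute on var.frontdoor, the region variable, and the naming scheme are all assumptions.

```hcl
# Hypothetical inputs for a regional stamp under the proposed layout.
variable "frontdoor" {
  type = object({
    profile_id      = string
    endpoint_id     = string
    origin_group_id = string # shared origin group, owned by the global layer in this sketch
  })
}

variable "region" {
  type = string # e.g. "westus2"; used here only to keep origin names unique
}

# One origin per regional stamp, all attached to the same shared origin group.
resource "azurerm_cdn_frontdoor_origin" "regional" {
  name                           = "origin-${var.region}"
  cdn_frontdoor_origin_group_id  = var.frontdoor.origin_group_id
  enabled                        = true
  certificate_name_check_enabled = false
  host_name                      = azurerm_container_app.frontend.ingress[0].fqdn
  origin_host_header             = azurerm_container_app.frontend.ingress[0].fqdn
  http_port                      = 80
  https_port                     = 443
  priority                       = 1
  weight                         = 1000
}
```

Giving every regional origin the same priority and weight is one way to aim for an active-active spread; different priorities would instead describe a primary and failover arrangement.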

Conclusion

Terraform makes it easy to stamp out environments, but Azure Front Door adds a layer of complexity because it straddles global and regional scopes. Configuring it correctly requires understanding how domains are validated, how routes must explicitly associate with domains, and how to organize components for scalability.

This setup is still evolving, especially as I prepare for multi-region deployments. But even now, it provides a solid, reusable foundation for deploying secure, domain-routed applications through Azure Front Door using Terraform.