Provisioning a VM on Proxmox with Terraform: Lessons from a Cloud-Init Deployment

Terraform offers powerful infrastructure automation, and when paired with Proxmox, it can enable rapid provisioning of virtual machines in a home lab or enterprise environment. However, integrating these two tools — especially when using cloud-init configurations — requires navigating some nuances around storage types, SSH access, and Proxmox node configuration. This article walks through a real-world setup, highlighting the roadblocks encountered and how they were resolved.

Starting Point: Downloading a Cloud Image with Terraform

The Terraform Proxmox provider supports various resource types, including downloading image files via Proxmox’s download-url API.

https://registry.terraform.io/providers/bpg/proxmox/latest/docs/resources/virtual_environment_download_file

Manages files upload using PVE download-url API. It can be fully compatible and faster replacement for image files created using proxmox_virtual_environment_file. Supports images for VMs (ISO images) and LXC (CT Templates).
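All resources in this article come from the bpg/proxmox provider linked above. Below is a minimal sketch of the provider requirements. It assumes the hashicorp/tls provider is also used, to generate the key that the cloud-init snippet later in this article references as tls_private_key.main. Version constraints are omitted and are worth pinning in a real project.

```hcl
terraform {
  required_providers {
    # Provider documented at registry.terraform.io/providers/bpg/proxmox
    proxmox = {
      source = "bpg/proxmox"
    }
    # Assumption: generates the SSH key pair referenced as tls_private_key.main
    tls = {
      source = "hashicorp/tls"
    }
  }
}
```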

A typical starting resource looks like this:

```hcl
resource "proxmox_virtual_environment_download_file" "latest_ubuntu_22_jammy_qcow2_img" {
  content_type = "iso"
  datastore_id = "local"
  node_name    = "pve"
  url          = "https://cloud-images.ubuntu.com/jammy/current/jammy-server-cloudimg-amd64.img"
}
```

This resource pulls an Ubuntu cloud image from the official repository and stores it in the local datastore. However, integrating this with cloud-init through the proxmox_virtual_environment_file resource introduces new complexities.

Datastore Compatibility and Limitations

Attempting to use cloud-init via proxmox_virtual_environment_file initially resulted in the following error:

the datastore “local” does not support content type “snippets”; supported content types are: [backup iso vztmpl]

This indicated that the local datastore didn’t support snippets, the content type needed for cloud-init user data. To address this, I tried switching to local-lvm, which had significantly more space (~1.5 TB compared to local’s 120 GB):

```hcl
resource "proxmox_virtual_environment_download_file" "ubuntu_cloud_image" {
  content_type = "iso"
  datastore_id = "local-lvm"
  node_name    = "pve"
  url          = "https://cloud-images.ubuntu.com/jammy/current/jammy-server-cloudimg-amd64.img"
}
```

This failed with:

Could not download file ‘jammy-server-cloudimg-amd64.img’, unexpected error: error download file by URL: received an HTTP 500 response — Reason: can’t upload to storage type ‘lvmthin’, not a file based storage!

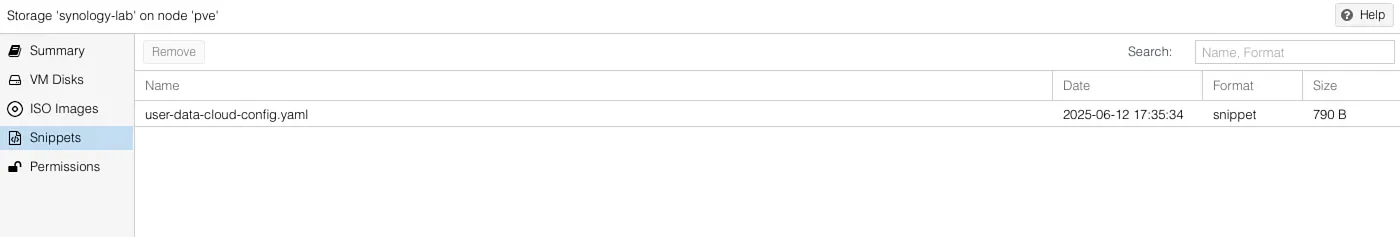

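Proxmox’s local-lvm is backed by LVM-thin, which is block storage. It holds VM disks and container volumes, but not files such as ISO images or snippets. The solution was to use file-based storage instead. In my case, that was an NFS share I had already configured as synology-lab on my Proxmox server.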
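For the image download, the change amounts to pointing datastore_id at the file-based NFS storage. The sketch below assumes that synology-lab has the ISO image content type enabled under Datacenter > Storage. The snippet in the next step additionally needs the Snippets content type on the same storage.

```hcl
resource "proxmox_virtual_environment_download_file" "ubuntu_cloud_image" {
  content_type = "iso"
  # File-based NFS storage; LVM-thin (local-lvm) rejects file uploads
  datastore_id = "synology-lab"
  node_name    = "pve"
  url          = "https://cloud-images.ubuntu.com/jammy/current/jammy-server-cloudimg-amd64.img"
}
```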

Introducing Cloud-Init with proxmox_virtual_environment_file

After switching to synology-lab, I proceeded to define a cloud-init snippet:

```hcl
resource "proxmox_virtual_environment_file" "user_data_cloud_config" {
  content_type = "snippets"
  datastore_id = "synology-lab"
  node_name    = "pve"

  source_raw {
    # <<-EOF strips the common leading indentation, so the YAML
    # nesting below is preserved relative to "#cloud-config"
    data = <<-EOF
    #cloud-config
    hostname: test-ubuntu
    timezone: America/Toronto
    users:
      - default
      - name: ubuntu
        groups:
          - sudo
        shell: /bin/bash
        ssh_authorized_keys:
          - ${trimspace(tls_private_key.main.public_key_openssh)}
        sudo: ALL=(ALL) NOPASSWD:ALL
    package_update: true
    packages:
      - qemu-guest-agent
      - net-tools
      - curl
    runcmd:
      - systemctl enable qemu-guest-agent
      - systemctl start qemu-guest-agent
      - echo "done" > /tmp/cloud-config.done
    EOF

    file_name = "user-data-cloud-config.yaml"
  }
}
```
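The snippet interpolates tls_private_key.main, which the excerpt above does not define. Below is a minimal sketch of such a resource, assuming the hashicorp/tls provider. The output name ssh_private_key is hypothetical. It makes the matching private key retrievable with terraform output -raw ssh_private_key.

```hcl
# Assumption: key pair generated by the hashicorp/tls provider
resource "tls_private_key" "main" {
  algorithm = "ED25519"
}

# Hypothetical output, for logging in as the "ubuntu" user afterwards
output "ssh_private_key" {
  value     = tls_private_key.main.private_key_openssh
  sensitive = true
}
```

Keep in mind that a key generated this way is stored unencrypted in the Terraform state, so the state file needs the same protection as the key itself.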

However, this resulted in a new error:

Error: failed to find node endpoint: failed to determine the IP address of node “pve”

Understanding SSH Requirements

The error stemmed from the way proxmox_virtual_environment_file operates: with the snippets content type, it uploads the file to the Proxmox node over SSH. My initial provider block relied only on an API token, which was insufficient for resources that need SSH.

Initially, I used:

```hcl
provider "proxmox" {
  insecure = true
}
```

The provider documentation for this resource spells it out:

The resource with this content type uses SSH access to the node. You might need to configure the ssh option in the provider section.

I hadn’t configured the ssh option in the provider block.

Because I was using an API token for the root user (probably not the best idea), I configured the provider block like this:

```hcl
provider "proxmox" {
  insecure = true

  ssh {
    agent    = true
    username = "root"
  }
}
```

The first time I tried this, I accidentally omitted the username = "root" line. That led to timeouts and authentication errors like this:

Error: failed to open SSH client: unable to authenticate user “” over SSH to “x.x.x.x:22”. Please verify that ssh-agent is correctly loaded with an authorized key via ‘ssh-add -L’ (NOTE: configurations in ~/.ssh/config are not considered by the provider): failed to dial x.x.x.x:22: dial tcp x.x.x.x:22: connect: operation timed out

After I added the username, Terraform still returned the original error:

Error: failed to find node endpoint: failed to determine the IP address of node “pve”

I found a similar issue on GitHub, now closed:

https://github.com/bpg/terraform-provider-proxmox/issues/126

It turned out that I also needed to set the PROXMOX_VE_SSH_PASSWORD environment variable in addition to PROXMOX_VE_API_TOKEN:

```shell
export PROXMOX_VE_SSH_PASSWORD='foo'
```

Strangely, I kept getting the SSH error. The scary part was that I did not recognize x.x.x.x. Why was the provider trying to SSH to it?

Still, the same IP address resolution issue persisted.

Setting Environment Variables and Defining the Node

The final fix required a more explicit SSH configuration plus a few environment variables. I updated my provider to:

```hcl
provider "proxmox" {
  insecure = true

  ssh {
    agent = true

    node {
      name    = "pve"
      address = "192.168.1.10"
    }
  }
}
```

The node block tells the provider which address to use when it opens an SSH connection to a given Proxmox node, instead of leaving the provider to determine that address itself. In my case, I only have one node: pve.
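Each node block maps one Proxmox node name to an SSH address, and the block can be repeated for every node in a cluster. The following is a sketch for a hypothetical two-node cluster. The second node, pve2, and its address are made up for illustration. As in the setup above, the SSH username is assumed to come from PROXMOX_VE_SSH_USERNAME. The port attribute is optional and defaults to 22.

```hcl
provider "proxmox" {
  insecure = true

  ssh {
    agent = true

    node {
      name    = "pve"
      address = "192.168.1.10"
    }

    # Hypothetical second node, for illustration only
    node {
      name    = "pve2"
      address = "192.168.1.11"
      port    = 22
    }
  }
}
```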

I also set the following environment variables for the provider:

- PROXMOX_VE_ENDPOINT: the URL of the Proxmox API on my server, https://192.168.1.10:8006/

- PROXMOX_VE_API_TOKEN: the API token, in the form user@realm!tokenid=secret, which the provider uses for control plane access.

- PROXMOX_VE_SSH_USERNAME and PROXMOX_VE_SSH_PASSWORD: the SSH credentials used by resource types that connect to the node over SSH, such as snippet uploads.
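The same values can also be passed as provider arguments instead of environment variables. The sketch below assumes two sensitive input variables. Their names, proxmox_api_token and proxmox_ssh_password, are hypothetical. Terraform can populate them from TF_VAR_proxmox_api_token and TF_VAR_proxmox_ssh_password, which keeps the secrets out of the configuration files.

```hcl
variable "proxmox_api_token" {
  description = "API token in the form user@realm!tokenid=secret"
  type        = string
  sensitive   = true
}

variable "proxmox_ssh_password" {
  type      = string
  sensitive = true
}

provider "proxmox" {
  # Equivalent of PROXMOX_VE_ENDPOINT
  endpoint = "https://192.168.1.10:8006/"
  # Equivalent of PROXMOX_VE_API_TOKEN
  api_token = var.proxmox_api_token
  insecure  = true

  ssh {
    agent = true
    # Equivalents of PROXMOX_VE_SSH_USERNAME and PROXMOX_VE_SSH_PASSWORD
    username = "root"
    password = var.proxmox_ssh_password

    node {
      name    = "pve"
      address = "192.168.1.10"
    }
  }
}
```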

Only after this complete configuration was the SSH connection established successfully, and the cloud-init snippet was uploaded to the synology-lab datastore.
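With both the image and the snippet in place, a VM can consume them. The article stops before this step, so the following is only a sketch based on the provider's proxmox_virtual_environment_vm resource. It assumes the following, any of which may differ in your environment:

- the image resource from the first example, stored on local
- a vmbr0 bridge with DHCP networking
- a 20 GB boot disk on local-lvm

```hcl
resource "proxmox_virtual_environment_vm" "test_ubuntu" {
  name      = "test-ubuntu"
  node_name = "pve"

  # qemu-guest-agent is installed and started by the cloud-init snippet
  agent {
    enabled = true
  }

  cpu {
    cores = 2
  }

  memory {
    dedicated = 2048
  }

  # Boot disk imported from the downloaded cloud image; LVM-thin is fine
  # for VM disks, it only rejects file uploads
  disk {
    datastore_id = "local-lvm"
    file_id      = proxmox_virtual_environment_download_file.latest_ubuntu_22_jammy_qcow2_img.id
    interface    = "virtio0"
    size         = 20
  }

  initialization {
    ip_config {
      ipv4 {
        address = "dhcp"
      }
    }

    # The snippet uploaded over SSH to synology-lab
    user_data_file_id = proxmox_virtual_environment_file.user_data_cloud_config.id
  }

  network_device {
    bridge = "vmbr0"
  }
}
```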

Conclusion

Provisioning VMs on Proxmox using Terraform — especially with cloud-init — requires careful handling of storage compatibility, SSH authentication, and provider configuration. Using local-lvm or other LVM-backed datastores won’t work for file uploads like ISO images or snippets. An NFS share (like my synology-lab volume) provides a compatible and flexible option.

Furthermore, while Proxmox’s API token is sufficient for control plane actions, SSH access is mandatory for certain resource types, such as snippet uploads through proxmox_virtual_environment_file. Explicitly defining your SSH configuration and setting the appropriate environment variables ensures smooth operation.

With the right configuration, Terraform becomes a powerful tool to automate Proxmox VM deployments, turning complex multi-step processes into reusable infrastructure-as-code.